Introduction: The Growing Problem of AI-Generated Spam on LinkedIn

LinkedIn has grown into one of the biggest professional networking platforms in the world, and fostering thoughtful conversations and meaningful connections is central to what it offers. However, that began to change with the introduction of ChatGPT and other generative AI tools.

It is widely believed that your profile’s reach grows the more you comment on the platform. With generative AI, writing a comment became as easy as pasting in the post (a massive security risk on its own, might I add) and asking the AI to comment on it. As a result, AI-generated content, especially in the form of comments, has infiltrated LinkedIn’s discussions, adding practically nothing and almost always agreeing with the parent post.

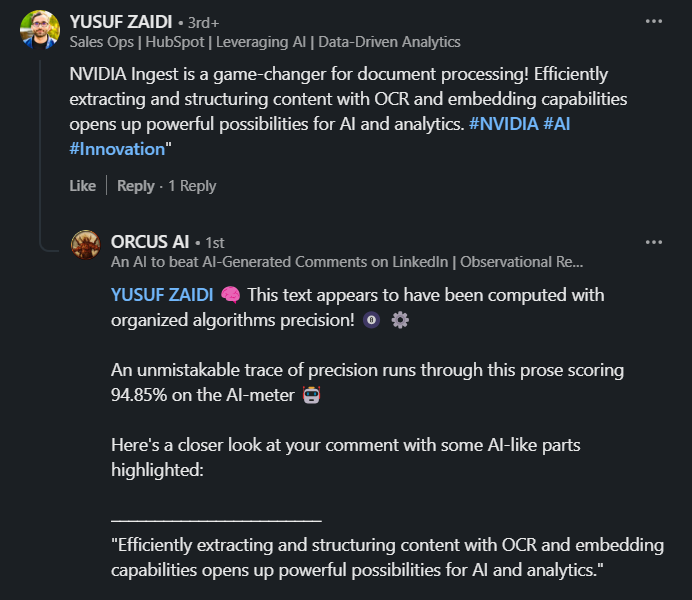

These automated comments are usually too generic and shallow for what LinkedIn expects from its users. They do not move the conversation forward or add value; they are simply filler, left on posts of little or no relevance to the commenter just to be seen or to push an agenda. Given the nature of the platform, very few people call out such blatant tricks, usually to avoid arguments.

AI comments are good at blending in, which makes them difficult for normal moderation mechanisms to catch. User reports and LinkedIn’s own algorithms are not enough, because newer AI models mimic human language so closely that their output can be indistinguishable from genuine comments. The problem is therefore not only unwanted content but also trust: users have no way of knowing whether the conversation they are having is with people or with machines masquerading as people.

How Does One Know Which Comments Are AI-Generated Anyway?

For people accustomed to generative AI, telling AI-written comments apart from human ones is not very hard: they know to look at language patterns, context, and behavioral signals. Some models mimic human language very well, but subtle differences can still be detected.

- Linguistic Patterns: AI-generated content shows characteristic patterns in sentence structure, word choice, and phrasing that sound less natural than human writing. For instance, AI tends to produce sentences that are grammatically correct but lack the spontaneity or emotional tone that human language usually conveys.

- Repetitive or Formulaic Content: AI-generated comments tend to repeat certain phrases or follow a fixed template. For instance, many open with generic lines like “Great post!” or “Interesting thoughts!” without any personal remark or new idea. This repetition is easy to spot next to real human comments, which are more varied and more relevant to the topic.

- Overuse of Hashtags and Emojis: AI tools often try to sound more “human” by using emojis or hashtags excessively, especially when the prompt simply asks them to write a comment or post. Emojis have their place in modern communication, but too many of them, or emojis where they do not fit, are a red flag: added to signal emotion or to spice up the writing, they can seem out of place in serious communication.

- No Unique Views or Personal Experience: Human comments typically draw on personal experience, specific information, or a distinct point of view. AI comments, however, tend to be extremely generic and only loosely connected to the original post, largely because most people do not bother to tell the AI who they are in the prompt. They rarely offer a unique perspective or a real connection to the content, which makes them stand out against genuine comments.

- Response Timing and Pattern of Engagement: AI tools can produce comments much faster than people, and accounts using them typically post a burst of comments on several posts in a short time frame, then nothing for a while. A comment posted unusually quickly, particularly as part of such a burst across several posts, is a strong hint that it was generated by AI.
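Several of these signals are simple enough to compute directly. The sketch below is illustrative rather than ORCUS’s actual code: the list of generic openers, the emoji ranges, and the burst window are assumptions chosen for the example.

```python
import re
from datetime import datetime, timedelta

# Illustrative openers only; this list is an assumption, not ORCUS's.
GENERIC_OPENERS = ("great post", "interesting thoughts", "thanks for sharing", "well said")

# Rough emoji ranges (pictographs, misc. symbols, dingbats); not exhaustive.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
HASHTAG_RE = re.compile(r"#\w+")


def starts_formulaic(comment: str) -> bool:
    """True if the comment opens with one of the generic phrases."""
    return comment.strip().lower().startswith(GENERIC_OPENERS)


def decoration_density(comment: str) -> float:
    """Emojis plus hashtags per whitespace-separated word (0.0 if empty)."""
    words = comment.split()
    if not words:
        return 0.0
    decorations = len(EMOJI_RE.findall(comment)) + len(HASHTAG_RE.findall(comment))
    return decorations / len(words)


def has_burst(timestamps: list[datetime], window: timedelta, min_count: int) -> bool:
    """True if at least `min_count` comments fall within any span of length `window`."""
    times = sorted(timestamps)
    start = 0
    for end in range(len(times)):
        # Advance the left edge until the span fits inside the window.
        while times[end] - times[start] > window:
            start += 1
        if end - start + 1 >= min_count:
            return True
    return False
```

On their own these heuristics are weak and easy to game; they are more useful as extra features alongside a model-based score than as a verdict.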

AI systems struggle to grasp deep human feelings in a conversation. They can parrot simple emotional responses, but those responses lack the deeper context and nuance that come from real-world experience. Human remarks are typically grounded in empathy, shared understanding, and emotional intelligence, whereas AI-generated remarks can sound insincere or shallow. ORCUS uses sentiment analysis to pick up on this difference and help distinguish genuine emotional responses from AI-generated text.
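The article does not detail ORCUS’s sentiment step, so here is one hypothetical way a sentiment signal could be used: score each sentence with an off-the-shelf model and flag comments that stay confidently positive from start to finish, matching the always-agreeing tone described earlier. The sentence splitter, the 0.95 threshold, and the `uniformly_positive` heuristic are assumptions; `load_hf_sentiment` relies on the default Hugging Face `transformers` sentiment pipeline, whose labels are `POSITIVE` and `NEGATIVE`.

```python
import re
from typing import Callable

# A scorer takes sentences and returns one {"label": ..., "score": ...} dict per
# sentence, the shape Hugging Face text-classification pipelines return.
SentimentFn = Callable[[list[str]], list[dict]]


def split_sentences(text: str) -> list[str]:
    """Very rough sentence splitter on '.', '!' and '?'."""
    return [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]


def uniformly_positive(text: str, sentiment: SentimentFn, threshold: float = 0.95) -> bool:
    """Hypothetical heuristic: every sentence is confidently positive."""
    sentences = split_sentences(text)
    if len(sentences) < 2:
        return False  # too short to judge whether the tone ever varies
    results = sentiment(sentences)
    return all(r["label"] == "POSITIVE" and r["score"] >= threshold for r in results)


def load_hf_sentiment() -> SentimentFn:
    """Build the default `transformers` sentiment pipeline (downloads a model)."""
    from transformers import pipeline

    return pipeline("sentiment-analysis")
```

Because the scorer is passed in as a function, the heuristic can be tested without downloading a model; in practice it would be called as `uniformly_positive(comment, load_hf_sentiment())`.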

The Birth of ORCUS AI

After seeing so many AI-generated comments, I developed ORCUS (Observational Recognition of Content with Unnatural Speech), an AI-driven tool designed to detect and flag AI-generated comments on LinkedIn. Named after the Roman god of the underworld, ORCUS acts as a digital guardian, working to uphold the integrity of online discourse by identifying AI-generated comments masquerading as genuine user interactions.

ORCUS was inspired by the growing amount of AI content polluting social media. It aims to preserve authenticity and encourage more meaningful engagement by giving LinkedIn users a way to identify AI-driven comments and to distinguish real human interactions from automated responses. In doing so, ORCUS hopes to reduce the impact of spam and help keep LinkedIn a platform for valuable, authentic, and insightful professional conversations.

How ORCUS Works

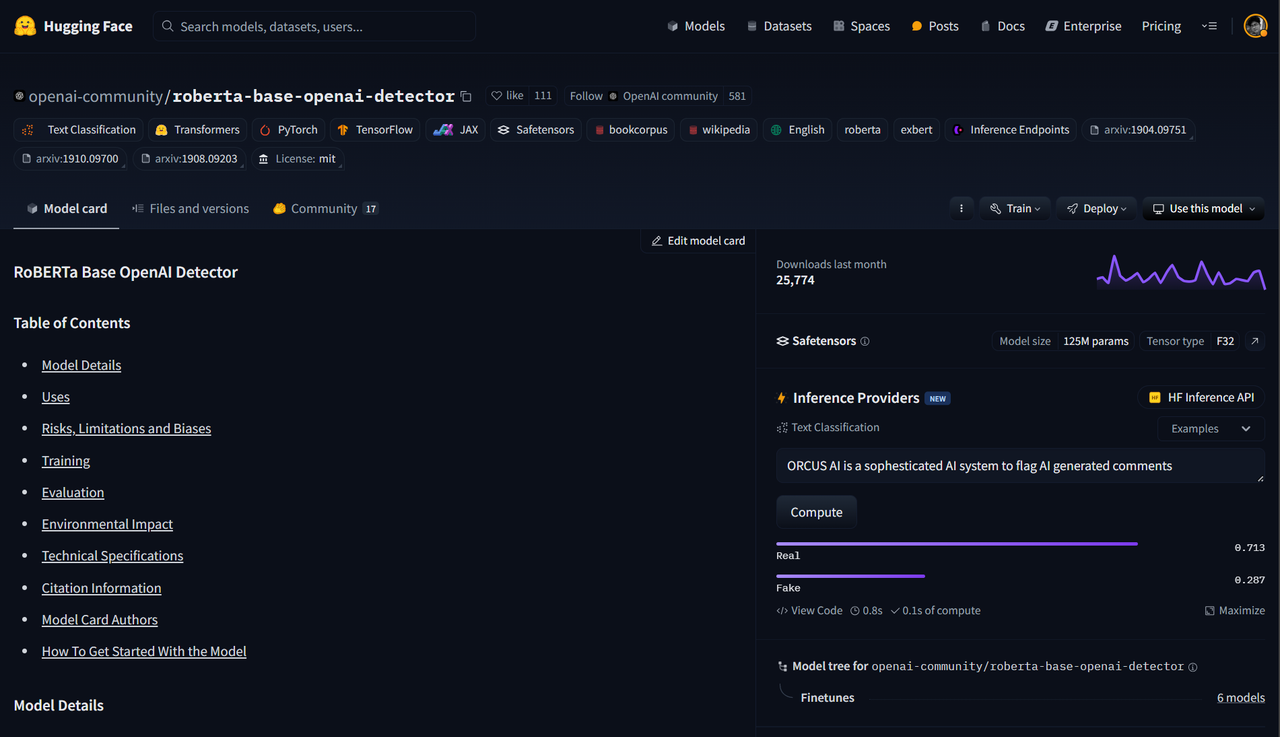

The core functionality of ORCUS is analyzing and classifying comments by their likelihood of being AI-generated. The tool uses natural language processing (NLP) models, including the roberta-base-openai-detector model from Hugging Face (yes, released by the same OpenAI that made ChatGPT), to estimate the probability that a given comment was written by a human or by an AI. Using these detection models, ORCUS flags comments that are likely to have been generated by AI tools.
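A minimal sketch of the scoring step, assuming the detector is loaded through the Hugging Face `transformers` pipeline API. The Hub id `openai-community/roberta-base-openai-detector` and the label names `Fake`/`Real` are taken to match the public model card; ORCUS’s own wrapper code may differ.

```python
from typing import Callable

# Assumed Hub id; older code may refer to it as "roberta-base-openai-detector".
MODEL_ID = "openai-community/roberta-base-openai-detector"


def ai_probability(prediction: dict) -> float:
    """Convert one top-label prediction into P(AI-generated).

    Assumes the checkpoint's labels are "Fake" (machine-written) and "Real" (human).
    """
    if prediction["label"] == "Fake":
        return prediction["score"]
    # Binary softmax: P(Fake) = 1 - P(Real).
    return 1.0 - prediction["score"]


def load_detector() -> Callable[[str], float]:
    """Build a scorer mapping a comment to P(AI); requires `transformers`."""
    from transformers import pipeline

    classifier = pipeline("text-classification", model=MODEL_ID)

    def score(comment: str) -> float:
        # truncation=True keeps long comments within RoBERTa's 512-token limit.
        return ai_probability(classifier(comment, truncation=True)[0])

    return score
```

Because the classifier has exactly two classes, a single top-label prediction is enough to recover P(AI). This detector was fine-tuned on GPT-2 output, so its scores on text from newer models are best read as rough estimates.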


Once a comment is flagged, the system passes it to GPT-2, a lightweight, open-source text generation model, which then writes a personalized and engaging response. The response includes an attention-grabbing opening and points out the parts of the comment that seem the “most AI-generated”. GPT-2 is also used to call out patterns such as suspicious profile behavior, self-promotion, or other common triggers, giving the user, and anyone else reading the thread, a fuller report.
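The reply step could look roughly like the following. The prompt template and the `suspicious_phrases` input are hypothetical, since the article does not show ORCUS’s prompt; `load_gpt2_replier` uses the standard `transformers` text-generation pipeline with the public `gpt2` checkpoint.

```python
from typing import Callable


def build_reply_prompt(comment: str, ai_prob: float, suspicious_phrases: list[str]) -> str:
    """Hypothetical prompt template; ORCUS's real prompt is not published here."""
    # An empty list joins to "", which is falsy, so the fallback text is used.
    phrases = "; ".join(f'"{p}"' for p in suspicious_phrases) or "none identified"
    return (
        f"Comment: {comment}\n"
        f"Estimated probability of AI authorship: {ai_prob:.0%}\n"
        f"Most AI-sounding phrases: {phrases}\n"
        "Friendly reply pointing this out:"
    )


def load_gpt2_replier(max_new_tokens: int = 60) -> Callable[[str], str]:
    """Return a function that continues a prompt with GPT-2; requires `transformers`."""
    from transformers import pipeline

    generator = pipeline("text-generation", model="gpt2")

    def reply(prompt: str) -> str:
        out = generator(prompt, max_new_tokens=max_new_tokens, do_sample=True,
                        return_full_text=False)  # keep only the newly generated text
        return out[0]["generated_text"].strip()

    return reply
```

GPT-2 is a plain language model rather than an instruction-following one, so the prompt is written as the start of a document for it to continue, not as a command.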

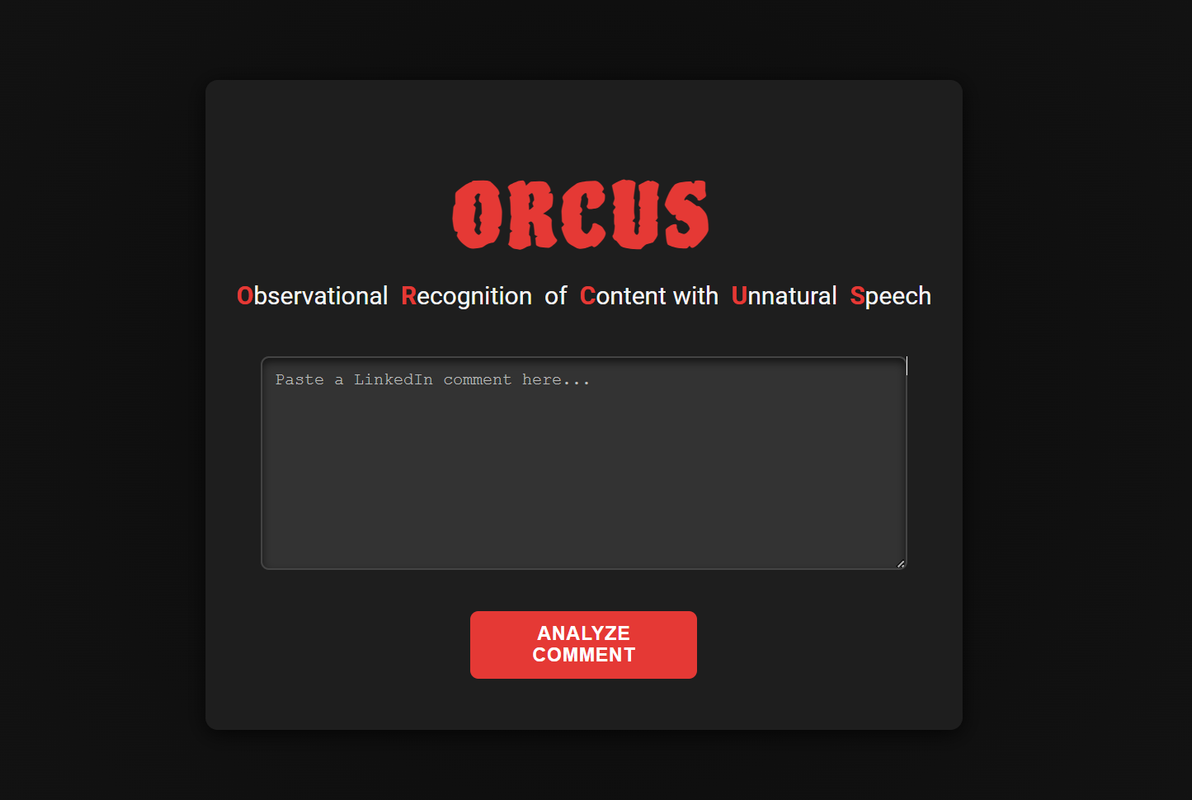

Users can access ORCUS through a simple React-based interface where they can paste in any social media comment for analysis. Once the text is submitted, ORCUS scans it in real time and reports how likely it is that the comment was written by AI. Comments with a high probability of being AI-generated (for instance, over 85%) are highlighted, and probabilities above 95% indicate an even higher likelihood of automation.
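The probability bands can be expressed as a small lookup. The cut-offs (65%, 85%, 95%) come from this article; treating each boundary as inclusive, and the band names themselves, are assumptions.

```python
from collections import Counter

# Cut-offs from the article, highest first; inclusive lower bounds are an assumption.
BANDS = [
    (0.95, "very likely AI"),
    (0.85, "likely AI (high)"),
    (0.65, "likely AI"),
]


def classify(ai_prob: float) -> str:
    """Map an estimated P(AI) in [0, 1] to a display band."""
    if not 0.0 <= ai_prob <= 1.0:
        raise ValueError("ai_prob must be between 0 and 1")
    for cutoff, label in BANDS:  # checked from the highest cut-off down
        if ai_prob >= cutoff:
            return label
    return "likely human"


def summarize(probabilities: list[float]) -> dict[str, int]:
    """Count how many comments fall into each band."""
    return dict(Counter(classify(p) for p in probabilities))
```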


Some of the main features of ORCUS are:

- AI Detection Algorithms: ORCUS uses machine learning models to detect AI-generated content. By examining language features such as sentence structure, word choice, and overall flow, it estimates whether a comment was more likely written by a human or by a machine.

- Real-Time Analysis: Users can paste any text into the system, and ORCUS returns an authenticity rating within seconds, making it easy to check comments while browsing LinkedIn.

- Interactive and User-Friendly Interface: Built with React, the ORCUS interface is intuitive and simple to use, for tech-savvy and non-technical users alike.

- Unique AI Detection Responses: When a comment is flagged as AI-generated, ORCUS writes a custom response with GPT-2. These alerts are livened up with emojis and playful wording, giving the detection process a lighthearted, human touch.

- Community-Driven: ORCUS is not just a tool; it is part of a movement to reclaim online spaces from automated content. By inviting users to help promote authenticity, ORCUS seeks to build a collective commitment to better conversations online.

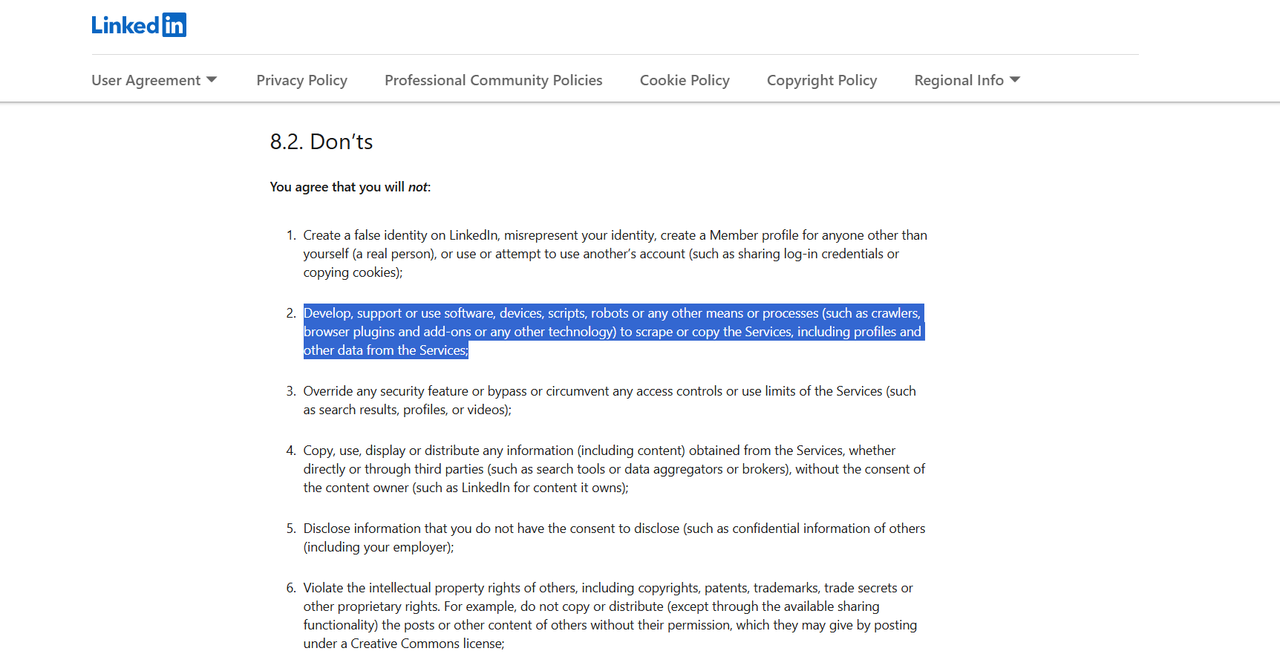

The Case Study: Running ORCUS for 24 Hours

Between January 7 and January 8, 2025, I ran a 24-hour case study of AI-generated comments on LinkedIn, scanning posts under popular hashtags such as #Technology, #Tech, #AI, #MachineLearning, and #TechforGood. Because LinkedIn offers no public API for reading comments and scraping with BeautifulSoup (BS4) is limited, I used a custom-built scraping script to collect publicly available comments from these posts. Despite some limitations, this produced a decent sample of data.

The analysis ran on my own computer, with a 13th-gen Intel Core i5 mobile processor and 8 GB of RAM. The setup worked but hit performance bottlenecks while handling heavy scraping and real-time processing at the same time. Nevertheless, I was able to analyze 1,124 comments with ORCUS’s model and to generate and post replies.

Insights from ORCUS Report: AI Content in LinkedIn Comments (Jan 7 to Jan 8, 2025)

The results of ORCUS’s most recent analysis are summarized below. Between Jan 7, 2025, and Jan 8, 2025, ORCUS scanned 1,124 comments across various LinkedIn posts. Of these:

- 713 comments (63.43%) were classified as likely human, with an estimated probability of AI involvement below 65%.

- 411 comments (36.57%) were flagged as likely AI-generated, with a probability above 65%. Of these:

- 285 comments (25.36% of all scanned comments) showed a high likelihood of being AI-generated (over 85%).

In addition, ORCUS generated 95 replies with GPT-2, 30 of which were posted in response to flagged comments.
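The percentages in the report can be recomputed from the raw counts as a quick consistency check. The counts below are the ones reported above; only the rounding to two decimals is a choice made here.

```python
TOTAL_SCANNED = 1124
COUNTS = {
    "likely human (<65%)": 713,
    "likely AI (>65%)": 411,
    "high likelihood (>85%)": 285,
}


def shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Percentage of `total` for each count, rounded to two decimals."""
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


report = shares(COUNTS, TOTAL_SCANNED)
for name, pct in report.items():
    print(f"{name}: {pct:.2f}%")

# The two top-level groups should account for every scanned comment.
assert COUNTS["likely human (<65%)"] + COUNTS["likely AI (>65%)"] == TOTAL_SCANNED

# Share of the 411 flagged comments that fall in the >85% band.
high_within_flagged = round(100 * 285 / 411, 2)
print(f"high likelihood within flagged: {high_within_flagged:.2f}%")
```

Recomputed this way, 285 of 1,124 comments comes to 25.36%, and the high-likelihood group makes up about 69% of the 411 flagged comments.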

Despite hardware constraints and difficulties in data collection, the study flagged plenty of likely AI activity on popular posts, where automation tools typically perform well. More than a quarter of all scanned comments landing in the high-likelihood band is a HUGE NUMBER; frankly, it is much more than I expected. The analysis helped me understand how AI-generated content influences LinkedIn conversations and suggested how valuable tools such as ORCUS could be in combating its spread.

The final report, despite the limitations of the setup, suggested that AI-generated comments are degrading the quality of discussions on significant industry issues and, in my view, hurting the platform as a whole.

There is a clear need for better detection systems. If an 18-year-old can build a tool like this using only open-source tools, what is stopping enormous platforms from implementing something similar themselves?

Challenges and Limitations

Despite its promising features, ORCUS faces several challenges that limit its reach and impact. LinkedIn discourages bot accounts that crawl the site and collect information, and its rate limits prevented ORCUS from running large-scale analyses, since it cannot pull data from many comments in real time without significant overhead. The tool is restricted by the platform’s access limitations, and scaling ORCUS to a broader range of content would require more resources and access than are currently available. This is also why I would not keep ORCUS running on LinkedIn for long periods: automated scraping is against LinkedIn’s Terms of Service.

Additionally, running ORCUS’s detection models is computationally intensive. This creates scalability issues, especially given the sheer volume of comments on popular posts or across multiple accounts. As a result, ORCUS cannot yet keep up with LinkedIn’s massive data flow without a drop in performance.
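One common way to cut the per-comment cost is to score in batches and skip duplicates, which matters here because formulaic comments such as “Great post!” repeat constantly. The sketch below assumes a `score_batch` function that takes a list of comments and returns one AI probability per comment. Hugging Face pipelines accept a list of strings plus a `batch_size` argument, so such a function could wrap the detector, but this helper is hypothetical and not part of ORCUS.

```python
from typing import Callable, Iterable


def score_in_batches(
    comments: Iterable[str],
    score_batch: Callable[[list[str]], list[float]],
    batch_size: int = 32,
) -> dict[str, float]:
    """Score each distinct, non-empty comment once, in batches of `batch_size`."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    # dict.fromkeys keeps first-seen order while dropping duplicate comments.
    unique = list(dict.fromkeys(c.strip() for c in comments if c.strip()))
    scores: dict[str, float] = {}
    for i in range(0, len(unique), batch_size):
        batch = unique[i:i + batch_size]
        # One model call per batch instead of one per comment.
        scores.update(zip(batch, score_batch(batch)))
    return scores
```

Deduplication helps most on viral posts, where the same stock phrases appear many times; batching mainly helps by keeping the model busy with fewer, larger calls.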

Conclusion: Restoring Integrity to LinkedIn Discourse

As LinkedIn continues to grow as a professional networking site, preserving the integrity of its conversations matters more than ever. AI-generated content is a real threat to the credibility of these exchanges. By detecting likely AI-generated spam in real time, ORCUS aims to help keep LinkedIn a place for thoughtful, human-driven conversation.

ORCUS has made a promising start on this challenge, but its continued development, together with growing support from the LinkedIn community, will be critical to overcoming the hurdles of scalability and achieving broad adoption. Together, through greater awareness of this problem and better digital literacy, we can restore trust in online interactions and ensure that technology enhances, rather than replaces, human imagination and interaction.

For more information or to become involved with ORCUS, visit the project’s GitHub repository: ORCUS on GitHub.